FAISS and HNSW are other options worth trying to speed up the search for nearest neighbors. An alternative approach is to use the NLP technique of TF-IDF combined with K-Nearest Neighbors and n-grams to find the matched strings (see "Super Fast String Matching in Python", 2019).

MLkNN is a kNN classification method adapted for multi-label classification. It uses k-nearest neighbors to find the nearest examples to a test example and uses Bayesian inference to select the assigned labels. The Multilabel k Nearest Neighbours class takes the parameters (k=10, s=1.0, ignore_first_neighbours=0).

In the K-nearest neighbors algorithm, most of the time you don't really know the meaning of the input parameters or the classification classes available. In interviews, you will get such data to hide the identity of the customer.

KNeighborsClassifier: KNN Python Example. GitHub Repo: KNN GitHub Repo. Data source used: GitHub of Data Source.
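The TF-IDF plus n-grams idea can be sketched in plain Python. This is a minimal illustration, not the method from the cited post: the function names (`char_ngrams`, `fit_idf`, `tfidf_vector`, `nearest_strings`), the trigram size, the smoothed IDF formula, and the company names are all assumptions made for the example. It does a brute-force cosine-similarity search; for large datasets an approximate index such as FAISS or HNSW would take the place of the final loop.

```python
import math
from collections import Counter

def char_ngrams(text, n=3):
    """Split a string into overlapping character n-grams (lowercased)."""
    text = text.lower()
    if len(text) < n:
        return [text]
    return [text[i:i + n] for i in range(len(text) - n + 1)]

def fit_idf(corpus, n=3):
    """Compute a smoothed inverse document frequency for every n-gram in the corpus."""
    doc_freq = Counter()
    for doc in corpus:
        doc_freq.update(set(char_ngrams(doc, n)))
    total = len(corpus)
    return {g: math.log((1 + total) / (1 + df)) + 1.0 for g, df in doc_freq.items()}

def tfidf_vector(text, idf, n=3):
    """Build an L2-normalised sparse TF-IDF vector (a dict) for one string."""
    counts = Counter(g for g in char_ngrams(text, n) if g in idf)
    vec = {g: c * idf[g] for g, c in counts.items()}
    norm = math.sqrt(sum(v * v for v in vec.values()))
    return {g: v / norm for g, v in vec.items()} if norm else {}

def cosine(a, b):
    """Cosine similarity of two L2-normalised sparse vectors."""
    if len(a) > len(b):
        a, b = b, a
    return sum(v * b.get(g, 0.0) for g, v in a.items())

def nearest_strings(query, corpus, k=1, n=3):
    """Return the k corpus strings most similar to the query as (score, string) pairs."""
    idf = fit_idf(corpus, n)
    q = tfidf_vector(query, idf, n)
    scored = [(cosine(q, tfidf_vector(doc, idf, n)), doc) for doc in corpus]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]

# Made-up names, purely for illustration.
names = ["Acme Corporation", "Globex Inc", "Initech LLC"]
best_score, best_match = nearest_strings("ACME Corp.", names, k=1)[0]
print(best_match)
```

Character n-grams make the match tolerant of abbreviations and small spelling differences, because two strings that share most of their trigrams end up with similar vectors even when no whole word matches.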

In R there is a package called kknn that lets you specify the maximum K you want to consider and selects the best K based on leave-one-out cross-validation. I have recently used weighted KNN for classification; it is more robust than traditional KNN.
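To make the idea concrete, here is a rough Python sketch of weighted KNN with K chosen by leave-one-out cross-validation. It is not how the kknn package is implemented: the inverse-distance weighting, the function names (`weighted_knn_predict`, `best_k_by_loocv`), and the toy data are assumptions chosen to keep the example short.

```python
import math
from collections import defaultdict

def weighted_knn_predict(train, query, k):
    """Predict a label by inverse-distance-weighted voting among the k nearest points.

    `train` is a list of (features, label) pairs; `query` is a feature tuple.
    """
    neighbours = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    votes = defaultdict(float)
    for features, label in neighbours:
        # Closer neighbours get larger weights; the small constant avoids division by zero.
        votes[label] += 1.0 / (math.dist(features, query) + 1e-9)
    return max(votes, key=votes.get)

def best_k_by_loocv(data, max_k):
    """Pick the k in 1..max_k with the highest leave-one-out accuracy."""
    best_k, best_acc = 1, -1.0
    for k in range(1, max_k + 1):
        correct = 0
        for i, (features, label) in enumerate(data):
            # Hold out point i and predict it from all the other points.
            held_out_train = data[:i] + data[i + 1:]
            if weighted_knn_predict(held_out_train, features, k) == label:
                correct += 1
        acc = correct / len(data)
        if acc > best_acc:  # ties keep the smaller k
            best_k, best_acc = k, acc
    return best_k, best_acc

# Toy data (made up): two well-separated clusters.
data = [
    ((1.0, 1.0), "a"), ((1.2, 0.8), "a"), ((0.9, 1.1), "a"),
    ((4.0, 4.0), "b"), ((4.2, 3.9), "b"), ((3.8, 4.1), "b"),
]
k, accuracy = best_k_by_loocv(data, max_k=3)
print(k, accuracy)
```

Weighting the votes by distance means a single very close neighbour can outvote several distant ones, which is one reason the weighted variant tends to be less sensitive to the exact choice of K.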

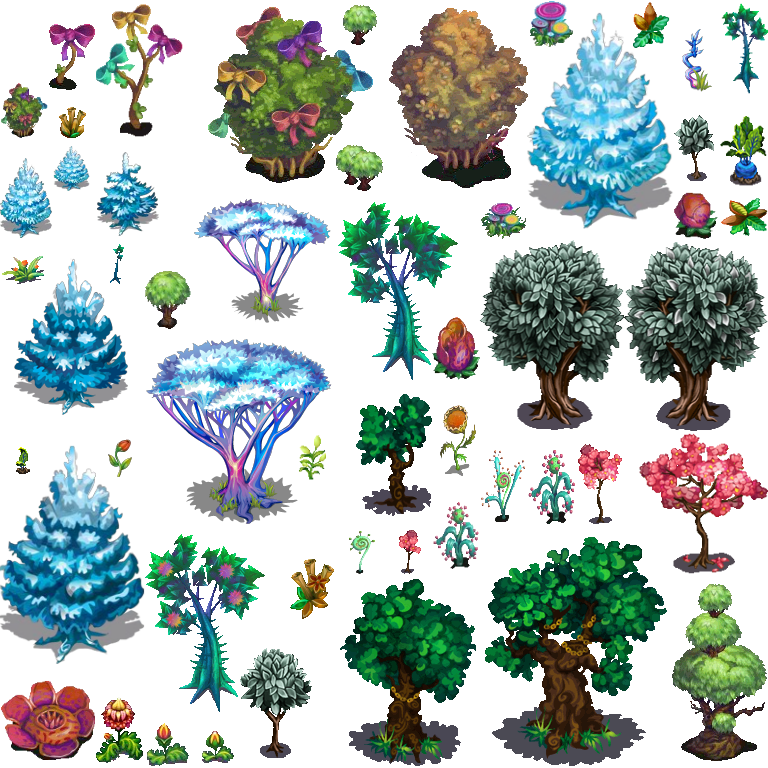


This code shows how to use a k-nearest neighbor classifier to find the nearest neighbor to a new incoming data point.

KNN, or K-nearest neighbors, is a non-parametric learning method in machine learning, used mainly for classification and regression. It is considered one of the simplest algorithms in machine learning.

Now, for a quick-and-dirty example of using the k-nearest neighbor algorithm in Python, check out the code below. First, start by launching the Jupyter Notebook / IPython application that was installed with Anaconda.
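The original listing is not available here, so what follows is a stand-in: a minimal from-scratch sketch of a k-nearest neighbor classifier that finds the nearest neighbors of a new incoming data point. The function name `knn_classify`, the Euclidean distance, and the toy points and labels are assumptions made for illustration. It uses only the standard library (Python 3.8+ for `math.dist`), so it can be pasted into a notebook cell as is.

```python
import math
from collections import Counter

def knn_classify(train_points, train_labels, new_point, k=3):
    """Classify new_point by majority vote among its k nearest training points."""
    # Pair each training point's Euclidean distance to new_point with its label.
    distances = [
        (math.dist(point, new_point), label)
        for point, label in zip(train_points, train_labels)
    ]
    # Keep the k closest training points.
    nearest = sorted(distances, key=lambda pair: pair[0])[:k]
    # The most common label among them is the prediction.
    label_counts = Counter(label for _, label in nearest)
    return label_counts.most_common(1)[0][0], nearest

# Toy data (made up for illustration): two features per point.
train_points = [(1.0, 2.0), (2.0, 1.5), (1.5, 1.8), (6.0, 7.0), (7.0, 6.5), (6.5, 7.2)]
train_labels = ["red", "red", "red", "blue", "blue", "blue"]

prediction, neighbours = knn_classify(train_points, train_labels, (2.0, 2.0), k=3)
print(prediction)
```

In practice a library class such as scikit-learn's `KNeighborsClassifier` would usually replace the hand-written function, but the logic is the same: compute distances, keep the k closest, and vote.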


Here's a visualization of the K-Nearest Neighbors algorithm. It calculates the distance between the test point and each point in the training data and gives the prediction accordingly.

The general idea behind K-nearest neighbors (KNN) is that a data point is considered to belong to the class that is most common among the points closest to it. The K nearest points around the data point to be predicted are taken into consideration.

Given a positive integer K and a test observation x₀, the classifier identifies the K points in the data that are closest to x₀. Therefore, if K is 5, the five closest observations to x₀ are identified. These points are typically represented by N₀. The KNN classifier then computes the conditional probability for class j as the fraction of the points in N₀ that belong to class j.
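Written out, the conditional probability described above is usually stated as follows. The symbols $y_i$ (the class of training observation $i$) and $I(\cdot)$ (the indicator function, equal to 1 when its argument is true and 0 otherwise) are notation assumed here rather than taken from the text:

$$
\Pr(Y = j \mid X = x_0) = \frac{1}{K} \sum_{i \in N_0} I(y_i = j)
$$

For example, with K = 5, if three of the five neighbours in N₀ belong to class A and two belong to class B, the estimated probabilities are 3/5 = 0.6 for A and 2/5 = 0.4 for B, so x₀ is assigned to class A.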
